Indexes is a big topic when it comes to SQL Server. I’m going to try to tell you the most basics about it. Hopefully this will help you figure out when and why you should use indexes. First, lets start with the real basics!

Go get some candy, coke and coffee. This will probably be the longest blog I’ve done so far. BUT PLEASE KEEP READING! In SQL Server you’ve got tables which stores data. In my example I have a table called Persons. This will have 5 records. In management studio there’s a VERY NICE FEATURE called “Include Actual Execution Plan”. I’ll use this to show you.

USE tempdb

CREATE TABLE Persons (

PersonID VARCHAR(8) NOT NULL,

FirstName VARCHAR(50) NOT NULL,

LastName VARCHAR(50) NOT NULL,

EMail VARCHAR(100) NULL,

ReverseEMail AS REVERSE(Email)

)

INSERT INTO Persons

(PersonID, FirstName, LastName, Email)

VALUES

('StolJens', 'Jens', 'Stoltenberg', 'jens@stortinget.no'),

('SolbErna', 'Erna', 'Solberg', 'erna@stortinget.no'),

('JensSiv', 'Siv', 'Jensen', 'siv@stortinget.no'),

('SponLars', 'Lars' ,'Sponheim', 'lars@stortinget.no'),

('NordVida', 'Vidar', 'Nordnes', 'vidar@omega.no')

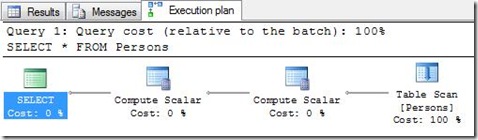

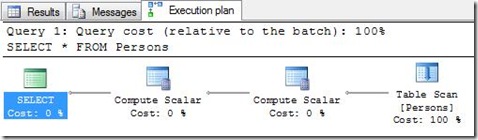

If I now do a SELECT * FROM Persons, everyone knows the results, but here’s the execution plan:

As you see, it’s doing a Table Scan which takes 100% of the cost of this query. A table scan is literally a scan through the whole (or in some cases, parts) of the table. The compute scalar items are there because I’ve got a computed column (ReverseEMail) in my table. We’ll get back to this later.

A table scan is in most cases VERY inefficient, because it has to go through all records with data. If this table had 100.000 records, it would have to go through 20MB of data (8+50+50+100 * 100.000 bytes). Of course, when doing a SELECT * from a table without a where clause, there is no other way than scan the whole table, because you need all data to be returned anyways.

There’s two kinds of indexes in SQL Server. Clustered and non-clustered. Non-clustered is in some ways a table for the table which only stores the values for the indexes columns. In the index there’s also added a rowid field which points to the correct record in the actual table. This way, when seeking the index, it can point you to the correct row in the table after it’s finished seeking. In 2005 you can have up to 249 non-clustered indexes, but in 2008 you can have up to 999! But before you start creating 999 indexes, be aware that indexes also contains data! If you index a column that is a integer, which is 4 bytes, it will be 4 bytes in the table and 4 bytes in the index PER RECORD. So, if you add 999 indexes, this will be 4 * 1000, which is 4000 bytes PER RECORD.

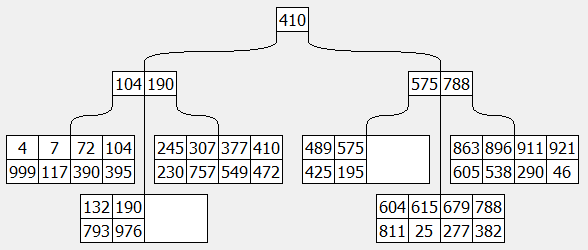

The other type of index is a clustered index. This is the physical (or actually logical, but just think of it as the physical) ordering on the harddisk. So, for instance when I add a clustered index on the PersonID field, it will order the table based on the PersonID field. The clustered index will have the other fields as datapages in the index, but the indexed field (PersonID) will be on the leaf node. I’m not going in to details about this, but if you’re interested, have a look at the definition of a B-tree. Anyhow.. Since the clustered index is the physical ordering of the actual values, so therefore a table can only have ONE clustered index. NB! If you have a clustered index on a table, you’ll never get a Table Scan. You’ll get a Clustered Index Scan, which is the same.

If we think of a table as a book, we’ve got the clustered index in front (Table of contents) of the book, having a sorted list (by chapters 1, 2, 3) of the content with pointers to the actual data (the page of the book). At the end of the book, you’ll find the non-clustered index. This is a index on the “word” field in our table. The word is sorted ascending with a pointer to the page, so the word “Assembly” might be found in pages 15, 19 and 333, and the word “Binary” might be found at page 16.

So, now we’re adding a clustered index to the PersonID field, and a non-clustered on the LastName column. This makes searching on LastName faster for the server, because instead of using a table scan (or clustered index scan), it can use the index to look up the values.

ALTER TABLE Persons

ADD CONSTRAINT PK_Persons

PRIMARY KEY CLUSTERED (PersonID)

CREATE NONCLUSTERED INDEX IX_Persons_LastName ON Persons (LastName)

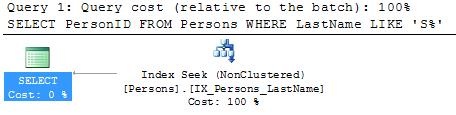

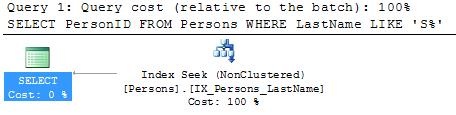

SELECT PersonID

FROM Persons

WHERE LastName LIKE 'S%'

Before taking a look at the execution plan for the select statement, let’s guess what it will do. First it will look at the Persons table to see if there’s any indexes on LastName. There is (IX_Persons_LastName). It will seek this for ‘S%’ and then do a lookup to the clustered index to get the PersonID.

TRICKED YOU! 😀 It’s only using a Index Seek on IX_Persons_LastName. But, where does it get the value of PersonID? From IX_Persons_LastName, of course. The reason is that if you have a table with no clustered index, it will add a RowID field to the table, and use this in all non-clustered indexes to keep them “in sync”. But, if you do have a clustered index, it will use this field (PersonID) as the RowID. That’s why it’s only using the non-clustered index.

Now, let’s do another query:

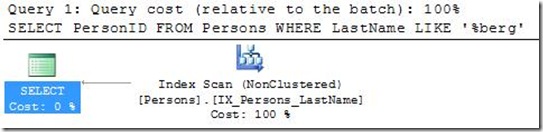

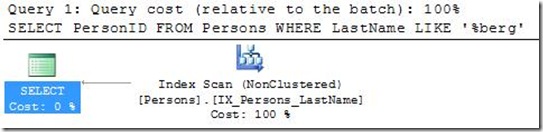

SELECT PersonID

FROM Persons

WHERE LastName LIKE '%berg'

We’re only getting the PersonIDs for those that have a LastName ending with “berg”. If we look at the execution plan, we’ll see that now it’s using a Index Scan, instead of an Index Seek

Index scan is just as bad as a table scan. It has to search the whole index to find the records it’s looking for. Let’s take the book as an example again. Find a book, go to the index in the back of the book and try to find all words ending with “ing”. If your book of choice is a computer book, you’ll eventually find at least “designing”, “hacking” and “implementing”. This will take some time. If you then try to find all words starting with C, you know where to start, and where to end. You know that you have to start after the last word that starts with B, and you know you have to stop when you hit D. This is the difference between a seek and a scan. But, what if you often have to find things that ENDS with something? Do you always have to do a index scan? No. As you might remember, I added a field called ReverseEMail to the table AS REVERSE(Email). This is a computed, not persisted field. This means it’s computed on the fly, and therefore not stored to the actual database. If we add an index to this field:

CREATE NONCLUSTERED INDEX IX_Persons_ReversedEmail

ON Persons (ReverseEMail) INCLUDE (FirstName)

SELECT FirstName

FROM Persons

WHERE ReverseEmail LIKE REVERSE('%stortinget.no')

SELECT LastName

FROM Persons

WHERE ReverseEmail LIKE REVERSE('%stortinget.no')

SELECT LastName

FROM Persons WITH (INDEX(IX_Persons_ReversedEmail))

WHERE ReverseEmail LIKE REVERSE('%stortinget.no')

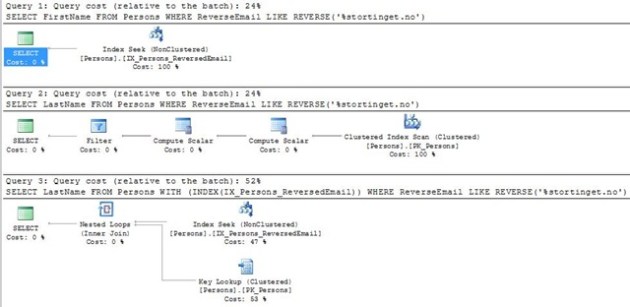

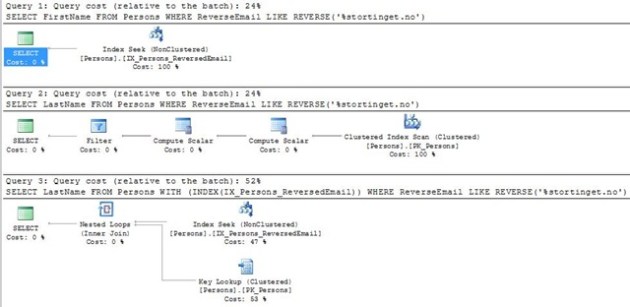

Now let’s have a look at the execution plan again for these three select statements:

Notice when I’m creating the index I’m doing it on the ReverseEMail field, but I’m also including the FirstName. INCLUDE was introduced in SQL Server 2005. This means that it includes the data for this field in the datapage, but not in the leaf node. In other words, you can’t filter on it but you can select it out of it.

In the first query you see it’s only using a index seek on IX_Persons_ReversedEmail, because it’s got everything it needs in that index. In the second query we select LastName instead of FirstName, which is NOT included in the index. Then the query optimizer figures out it’s faster to do a clustered index scan instead of using the index we created, because it needs to go in to this anyways to get the LastName. If we had more records it would probably not do this clustered index scan. In the third query I’ve used a table hint where I’m forcing query optimizer to use the index I created. Then it’s doing a Index Seek on the ReverseEmail field, and then doing a key lookup per record to the clustered index. As you see from the Query cost (relative to the batch) this is 52%, compared to 24% for the others. That means that even though you might think it’s quicker to use the index we just created, it’s actually not. Be aware, although all reasonable logics says that you’re correct, SQL Server might take an alternative route. When you execute a query, it uses a couple of milliseconds to calculates the best way to execute this query. If your statistics are up to date (I might write a post about statistics later), it’s very likely that SQL Server will be better than you to pick indexes to use 😉 So, keep your statistics updated and do not mess with table hints unless you really know what you’re doing! If you are interested, I’ll write a post about statistics, and one more detailed about execution plans. Please let me know!

So, to our conclusion. SQL Server IS smarter than you when it comes to picking the best execution plan. GET USED TO IT! 🙂 Create indexes on fields that are often used for filtering. If you use GROUP BY or ORDER BY, you should consider adding a clustered index to that (or those) field(s).

Marry Christmas everyone!